Bio

Hi! My name is David Knigge, I'm a third-year PhD student in Artificial Intelligence at the University of Amsterdam's Video Image Sense Lab. My research interests include continuous processing of discrete signals (e.g. Continuous Kernel Convolutions [1, 2], Neural Fields [3]), and efficient deep learning, for example through incorporating equivariances.

Recently I've been interested in diffusion models and the use of DL for solving continuous-time dynamical systems. Together with some colleagues, I'm currently trying to apply an MAE approach to learn continuous 3D scene representations!

I'm currently researching geometric approaches to robotics at New Theory in San Francisco, and I'm always up for a coffee!

In my spare time I love seeing sci-fi classics in the cinema and trying to make stuff with my hands.

If you would like to chat about any research-related topic, feel free to hit me up over email or on Twitter!

Selected Research

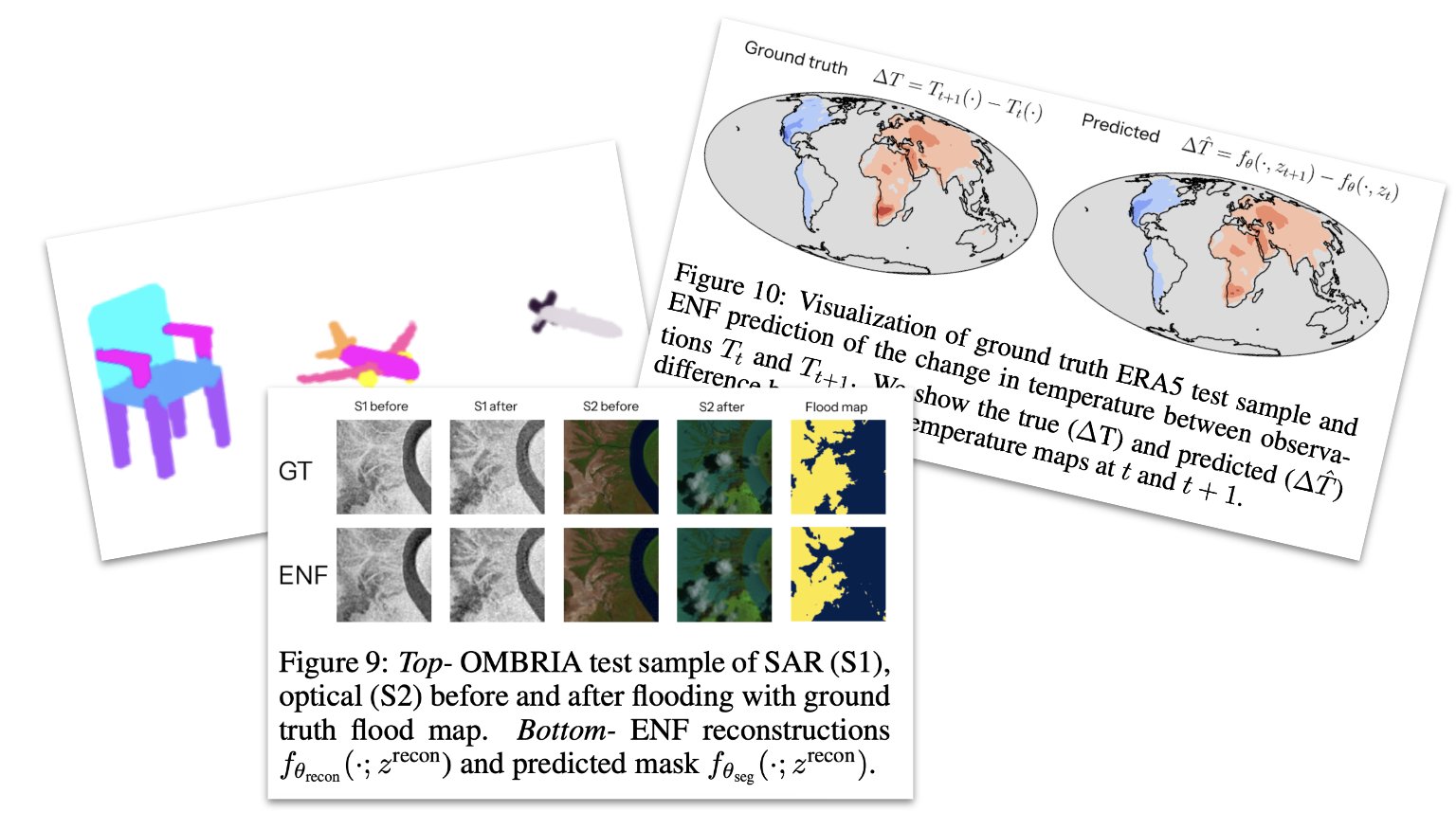

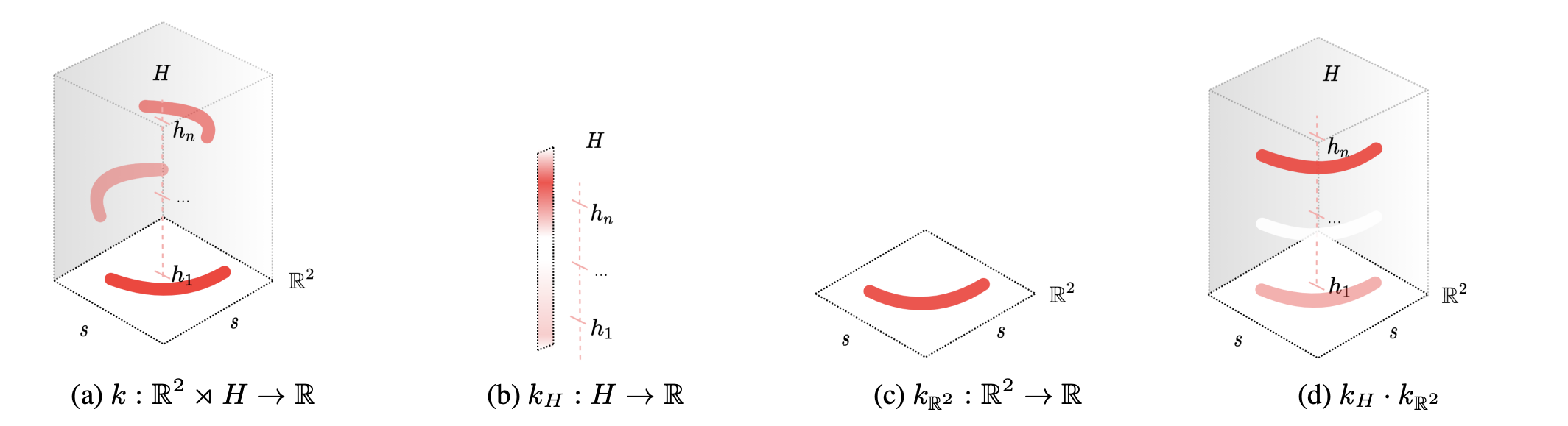

Space-Time Continuous PDE Forecasting using Equivariant Neural Fields

David Knigge*, David Wessels*, Riccardo Valperga, Samuele Papa, Jan-Jakob Sonke, Efstratios Gavves, Erik J. Bekkers

NeurIPS 2024

Building on recent work using neural fields as a representation for PDE solving, we investigate how to incorporate symmetries that often occur in physical data into a framework for continuous PDE solving by using Equivariant Neural Fields. We obtain impressive performance increases, especially over complicated geometries, which we attribute to the marked reduction in modelling complexity when respecting a PDE's symmetries.

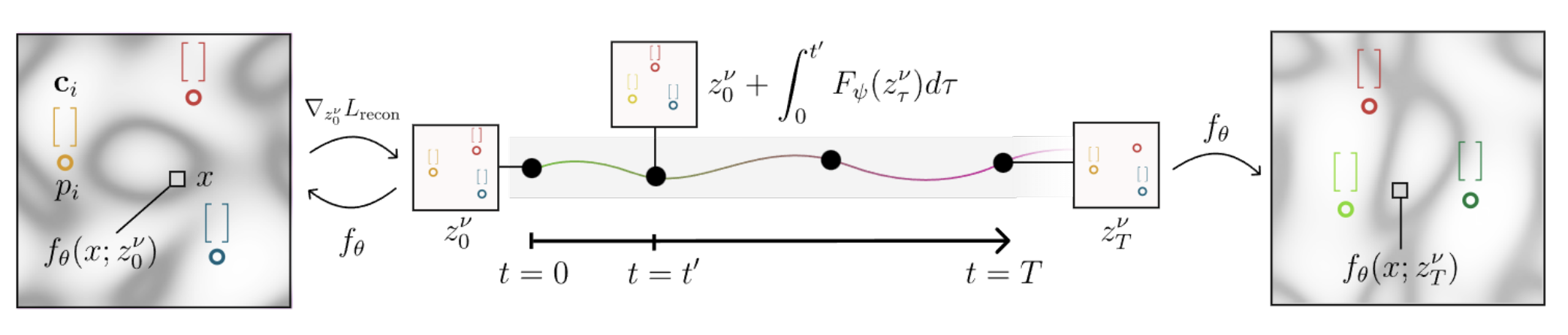

Grounding Continuous Representations in Geometry: Equivariant Neural Fields

David Wessels*, David Knigge*, Samuele Papa, Riccardo Valperga, Sharvaree Vadgama, Efstratios Gavves, Erik J Bekkers

Under review

We introduce a novel class of conditional neural fields that preserve relevant geometric properties of the data: transformation symmetries and locality. To this end, we propose a novel attention-based conditioning method, which disentangles a continuous signal into a set of pose-appearance latents. We show that this method improves the usability of neural fields as a signal representation, both in downstream tasks and in reconstruction.

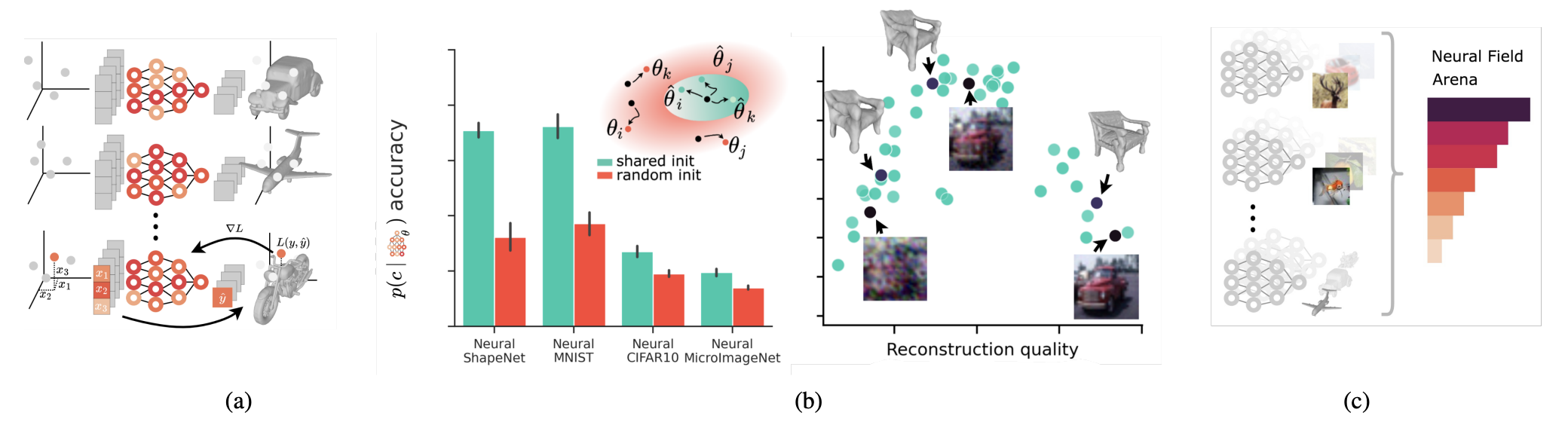

How to Train Neural Field Representations: A Comprehensive Study and Benchmark

Samuele Papa, Riccardo Valperga, David Knigge, Miltiadis Kofinas, Phillip Lippe, Jan-Jakob Sonke, Efstratios Gavves

CVPR 2024

In this work we investigate the recently proposed use of neural fields as signal representations. In this setting, the parameters of a neural field are used as features on which downstream tasks are learned (e.g. classification). We investigate how neural field hyperparameter choices impact downstream performance, and propose a benchmark of neural field variants of classic CV datasets to encourage further development in this direction.

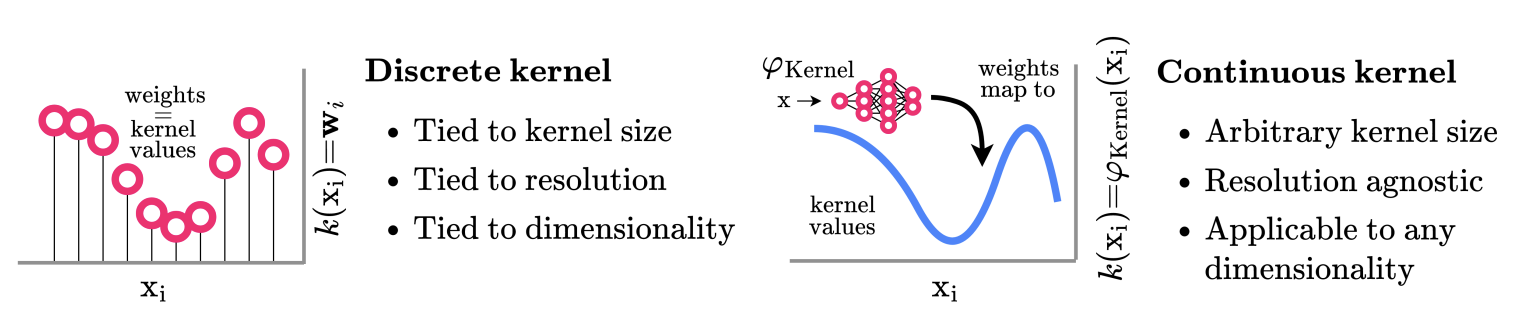

Modelling Long Range Dependencies in ND: From Task-Specific to a General Purpose CNN

David M. Knigge*, David W. Romero*, Albert F. Gu, Efstratios Gavves, Erik J. Bekkers, Jakub M. Tomczak, Jan-Jakob Sonke

ICLR 2023

Do kernel sizes in CNNs seem somewhat arbitrary to you as well? We wanted to devise a CNN architecture that does not require making a particular choice of kernel size when applying it to a new dataset. In fact, for this work, we wanted to do away with as many of the conventional CNN hyperparameters as possible. We approach this by using continuous functions to parameterize the convolutional kernels, making them independent of the input resolution of your data. In doing so, we are able to sample arbitrarily large kernels, which we show work particularly well on datasets with extremely long-range interactions (such as LRA!).

By using a small MLP that maps from relative kernel positions to kernel values (a) we're able to obtain kernels of arbitrary shapes and sizes. As such, we're able to apply the exact same architecture to (b) sequences, (c) images and (d) pointclouds, without any structural modifications.
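The idea of sampling a kernel from a small MLP can be sketched roughly as follows. This is a minimal pure-Python illustration, not the implementation from the paper: the helper names (`make_kernel_mlp`, `sample_kernel`, `conv1d_same`), the sine activation, the hidden width and the random (untrained) weights are all assumptions made for the example.

```python
import math
import random


def make_kernel_mlp(hidden=16, seed=0):
    """Tiny 1 -> hidden -> 1 MLP with fixed random weights.

    Hypothetical stand-in for a trained kernel network.
    """
    rng = random.Random(seed)
    w1 = [rng.gauss(0.0, 1.0) for _ in range(hidden)]
    b1 = [rng.gauss(0.0, 1.0) for _ in range(hidden)]
    w2 = [rng.gauss(0.0, 1.0 / math.sqrt(hidden)) for _ in range(hidden)]

    def kernel_fn(rel_pos):
        # Map one relative position in [-1, 1] to one kernel value.
        # The sine activation is an assumption of this sketch.
        return sum(w2[j] * math.sin(w1[j] * rel_pos + b1[j]) for j in range(hidden))

    return kernel_fn


def sample_kernel(kernel_fn, size):
    """Sample the continuous kernel at `size` evenly spaced positions in [-1, 1]."""
    if size == 1:
        return [kernel_fn(0.0)]
    return [kernel_fn(-1.0 + 2.0 * i / (size - 1)) for i in range(size)]


def conv1d_same(signal, kernel):
    """Zero-padded 'same' cross-correlation of a 1D signal with an odd-length kernel."""
    half = len(kernel) // 2
    out = []
    for t in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = t + k - half
            if 0 <= idx < len(signal):
                acc += w * signal[idx]
        out.append(acc)
    return out


kernel_fn = make_kernel_mlp()
small = sample_kernel(kernel_fn, 5)    # a conventional small kernel
large = sample_kernel(kernel_fn, 33)   # same parameters, kernel as long as the input
signal = [math.sin(0.3 * t) for t in range(33)]
output = conv1d_same(signal, large)
```

Both kernels come from the same set of MLP weights, so the kernel size becomes a sampling choice rather than a property of the parameters. Extending the input of the MLP from one coordinate to two or three would, under the same assumptions, give kernels for images or point clouds.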

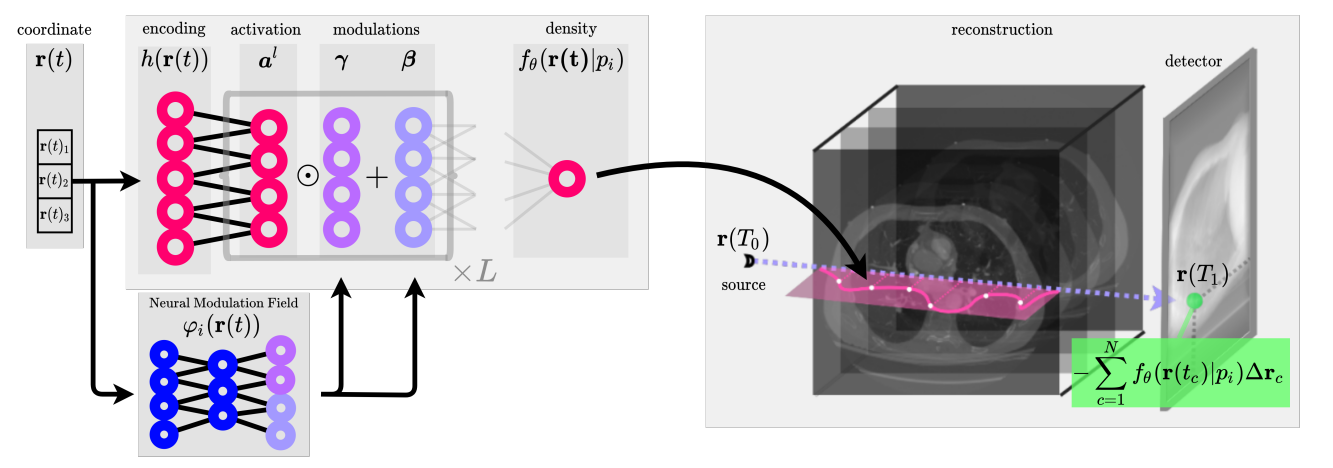

Neural Modulation Fields for Conditional Cone Beam Neural Tomography

Samuele Papa, David M. Knigge, Riccardo Valperga, Nikita Moriakov, Miltos Kofinas, Jan-Jakob Sonke, Efstratios Gavves

SynSML @ ICML 2023

We propose a method to use conditional neural fields for Cone Beam Computed Tomography (CBCT) reconstruction. To improve the reconstruction quality under limited numbers of available projections and with noise present, we propose a more expressive conditioning method: the Neural Modulation Field. We model a field of conditioning variables over the domain of the reconstructed density by use of a second neural field specific to each patient. We show this method improves reconstruction quality!
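A rough sketch of what "a field of conditioning variables" could look like is given below. It is an illustration under stated assumptions rather than the method from the paper: the class names, the scale-and-shift form of the modulation, the single linear layer in the modulation field, the tanh activation and the random (untrained) weights are all hypothetical choices made to keep the example short.

```python
import math
import random


def make_layer(in_dim, out_dim, rng):
    """Random weights and zero biases for one linear layer."""
    weights = [[rng.gauss(0.0, 1.0 / math.sqrt(in_dim)) for _ in range(in_dim)]
               for _ in range(out_dim)]
    return weights, [0.0] * out_dim


def linear(layer, x):
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


class ModulationField:
    """Patient-specific field: coordinate -> (scale, shift) per hidden unit."""

    def __init__(self, coord_dim, hidden, seed):
        self.hidden = hidden
        self.layer = make_layer(coord_dim, 2 * hidden, random.Random(seed))

    def __call__(self, coord):
        out = linear(self.layer, coord)
        return out[:self.hidden], out[self.hidden:]


class SharedDensityField:
    """Field shared across patients: (coordinate, modulation) -> density."""

    def __init__(self, coord_dim, hidden, seed=0):
        rng = random.Random(seed)
        self.first = make_layer(coord_dim, hidden, rng)
        self.last = make_layer(hidden, 1, rng)

    def __call__(self, coord, modulation):
        scale, shift = modulation
        pre = linear(self.first, coord)
        # The conditioning varies with the coordinate instead of being one
        # global latent vector per patient (assumed scale-and-shift form).
        hidden = [math.tanh((1.0 + s) * p + t) for p, s, t in zip(pre, scale, shift)]
        return linear(self.last, hidden)[0]


shared = SharedDensityField(coord_dim=3, hidden=16)
patient_a = ModulationField(coord_dim=3, hidden=16, seed=1)
patient_b = ModulationField(coord_dim=3, hidden=16, seed=2)

coord = [0.1, -0.2, 0.3]
density_a = shared(coord, patient_a(coord))
density_b = shared(coord, patient_b(coord))
```

The shared field holds what is common across patients, while each `ModulationField` instance plays the role of the second, patient-specific neural field. In a real setting both would be fitted to the measured projections, which this sketch leaves out.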

Exploiting Redundancy: Separable Group Convolutional Networks on Lie Groups

David M. Knigge, David W. Romero, Erik J. Bekkers

ICML 2022

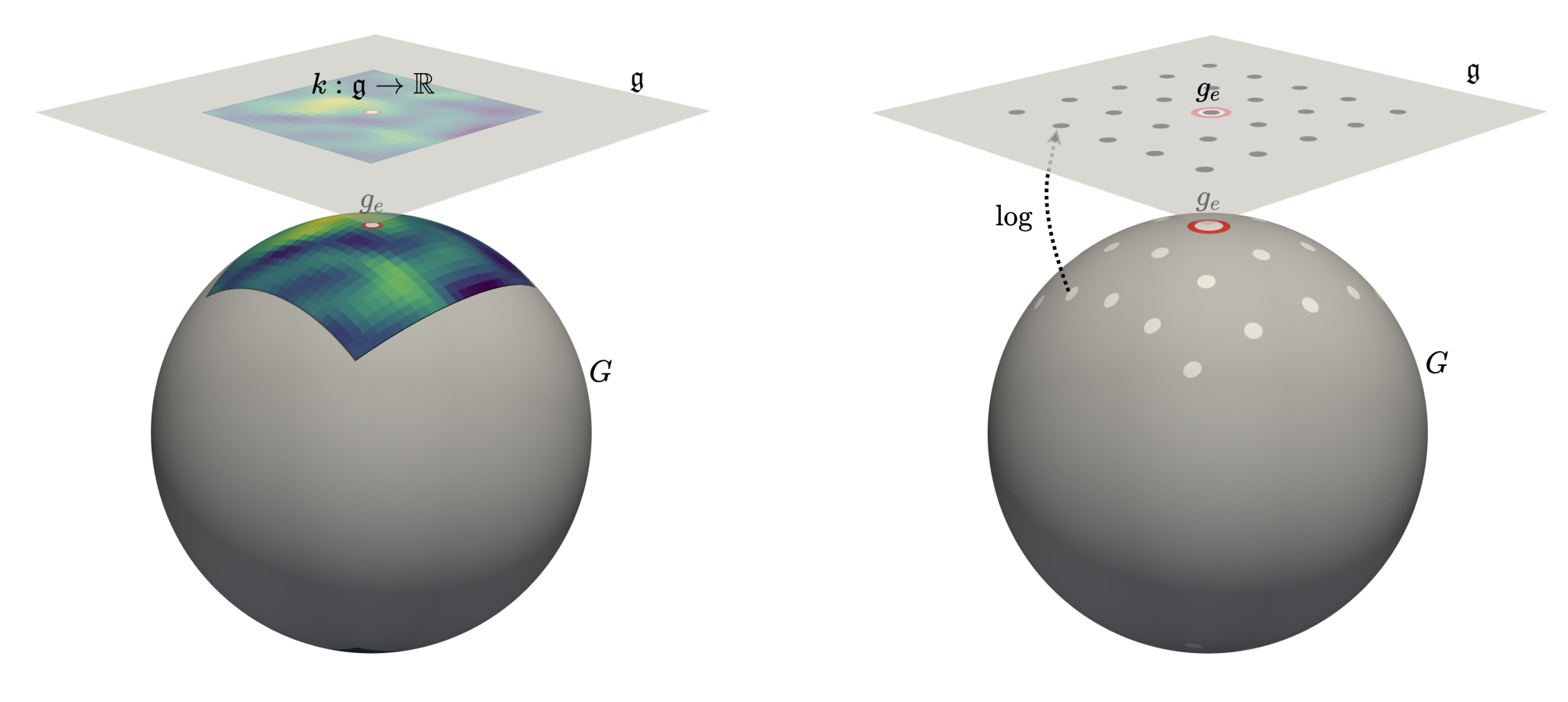

We aim to increase the efficiency of group equivariant convolutional networks by formulating separability of the group convolution operator (a) along subgroups (b,c). This led to significant computational efficiency increases which in turn enabled application of group equivariant neural networks to new and larger groups.

To prevent major aliasing artefacts and obtain approximate equivariance to continuous groups (such as rotations), we expressed the convolutional kernel as a continuous function over the corresponding Lie algebra of a group through a small MLP. Kernels can be sampled for group elements by use of the corresponding logarithmic map.
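For the planar rotation group SO(2), the two ideas above (a kernel that factorises along subgroups, and a kernel defined on the Lie algebra and sampled through the logarithmic map) can be sketched as follows. This is a simplified illustration, not the paper's implementation: the helper names, the tanh MLPs with random (untrained) weights, and the plain product of a rotation part and a spatial part are assumptions of the example.

```python
import math
import random


def so2_exp(theta):
    """Exponential map of SO(2): angle -> 2x2 rotation matrix."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


def so2_log(rotation):
    """Logarithmic map of SO(2): rotation matrix -> angle in (-pi, pi]."""
    return math.atan2(rotation[1][0], rotation[0][0])


def make_mlp(in_dim, hidden=8, seed=0):
    """Tiny in_dim -> hidden -> 1 MLP with fixed random weights (hypothetical)."""
    rng = random.Random(seed)
    w1 = [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(hidden)]
    b1 = [rng.gauss(0.0, 1.0) for _ in range(hidden)]
    w2 = [rng.gauss(0.0, 1.0 / math.sqrt(hidden)) for _ in range(hidden)]

    def mlp(x):
        return sum(
            w2[j] * math.tanh(sum(w1[j][i] * x[i] for i in range(in_dim)) + b1[j])
            for j in range(hidden)
        )

    return mlp


group_part = make_mlp(in_dim=1, seed=1)    # continuous kernel over the Lie algebra of SO(2)
spatial_part = make_mlp(in_dim=2, seed=2)  # continuous kernel over planar positions


def separable_kernel(position, rotation):
    """Kernel value at (position, rotation), factorised as k_H(log h) * k_X(x)."""
    return group_part([so2_log(rotation)]) * spatial_part(position)


# The rotation group can be discretised at any resolution, since the kernel
# is a continuous function of the Lie algebra coordinate.
n_rotations = 8
rotations = [so2_exp(2.0 * math.pi * i / n_rotations) for i in range(n_rotations)]
grid = [(x, y) for y in (-1.0, 0.0, 1.0) for x in (-1.0, 0.0, 1.0)]
kernel = [[separable_kernel(p, r) for p in grid] for r in rotations]
```

Because the kernel is a product of two factors, it only needs `n_rotations + len(grid)` MLP evaluations rather than `n_rotations * len(grid)` if the two parts are sampled once and combined, which is where the efficiency gain in this toy setting comes from.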

Miscellaneous

Every now and then I help organise the UvA's Deep Thinking Hour, an ELLIS-supported initiative where researchers in AI and DL are invited to discuss their ongoing research. For example, we've hosted an exciting panel discussion on the seeming trade-off between Scale and Modelling in modern Deep Learning research.

In the past, I also helped organise the UvA's Geometric Deep Learning Seminar, which specifically focused on highlighting research in the field of Geometric Deep Learning. Find some of our recorded talks on YouTube. We might pick this back up in the near future!

This website was last updated 10-10-2024 at 14:17.